AI Could Add Smarts to In-Content Shopping

Enabling in-video shopping experiences in movies and TV shows has long been a goal for media and entertainment companies, representing another largely untapped way to monetize their content outside of traditional advertising, product placements and distribution deals.

While there have been many attempts to implement in-video shopping, there’s been very little in the way of success — due to clunky user interfaces, interference with the overall viewing experience, or just lack of interest among consumers.

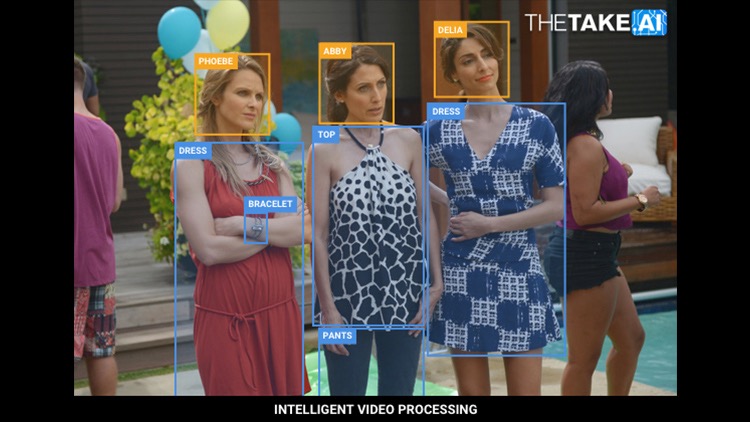

TheTake, a fledgling New York-based company, thinks it’s found the solution: artificial intelligence.

In February, the company launched a white-label offering for media companies that includes machine-learning, neural network technology capable of automatically recognizing products, people and locations in videos. Some of the biggest entertainment companies — Universal Pictures, Sony Pictures, Bravo, Fandango, E! and Comcast — are already using it to open up e-commerce with content.

Tyler Cooper, CEO and co-founder of TheTake, spoke with Next TV contributing editor Chris Tribbey about the results of the AI-enabled tech thus far.

Next TV: How did TheTake get started?

Tyler Cooper: We’ve been at this for a long time, and the business has evolved a great deal since the initial idea. The idea was simple: have a website and app where people could go to find the products they see in movies and TV, so if you’re watching Jason Bourne and you like that jacket, you could go to TheTake.com and find it.

The first evolution in the business occurred when we began finding ways to tie the products into video, like if someone was watching a movie trailer on our website, and they saw something they liked, the information would pop up and help them identify the product quickly. We found that people were engaging with that feature a lot, and realized tying this database to video assets was a promising way to go.

We kept building those tools for the website, but then Universal Pictures came to us about two years ago, and asked if it was possible to bake that feature directly into their next-gen electronic sell-through, digitally distributed movies, and those films enabled with our technology first debuted on Comcast. It’s essentially like interactive Blu-ray, but for digital download. So if you download a movie via Comcast, you have the option for product identification activity.

Organically, we grew from there, with others seeing what we had done with Universal. And that brought us to the first iteration of our B2B offering with Bravo last December, where we began making small clips on BravoTV.com shoppable.

Yet at that point, a lot of this data entry was still essentially manual, and we had some open-source computer solutions that would help pull the information from the videos. It was pretty rudimentary. We decided that to be in the B2B world, we needed to be more scalable, and doubled down on the deep learning, artificial intelligence space, with our engineers looking at the latest in neural networks and assessing what was going on there. Then they came up with something unique and proprietary, and it works really well, fully automating the process. That’s culminated in the launch of TheTake.AI.

Along the way, we’ve landed deals with E!, Fandango, and others. And we believe the technology has much broader applicability over what it’s being used for today, automating beyond what an actress is wearing and sourcing visually similar products from other retailers. It can be used by retailers for when people are on their ecommerce sites, if they want to surface similar matches, this can do that automatically. We also think our technology holds a lot of promise in natural language processing, comparing songs to other songs.

NTV: Identifying products in movies and TV shows has been a Holy Grail-type quest for the industry for a really long time, and a lot of companies before you have been on the scene and tried this, with varying results. What have you guys done differently to make progress on this front?

TC: If you look at all the different iterations that came before us, we’ve been really fortunate to have our shots come at the same time as this prevalence of digital content consumption, outside of the traditional, linear TV experience, with all these Internet-connected devices capable of delivering instantaneous, interactive experiences. It was a different story 10 years ago, even five years ago.

Now the industry is at a point where there’s so many eyeballs on these digital platforms, the math works out that it’s a profitable business, where before there weren’t enough people watching to enable interactivity like this.

NTV: What’s getting in the way of enabling this live, as a program is airing on TV? Is that attainable?

TC: That’s one of the coolest things. That was unobtainable for us a year ago, just because to do it in real-time, you need an extremely fast interface, or someone entering all the information in real time, curating it manually like we used to. Now that it’s automated, it’s become super effective to do this in real-time, and our next focus is having it set up to manage recognition as video runs, so there’s no lead time.

NTV: Are we including all the products we’d like to? I noticed you guys do locations as well. Are there areas we’d like to expand into that we’re not doing yet?

TC: To be honest, we’re focusing on the low-hanging fruit, where the data shows the most concentrated interest, the clothing and accessories the actors are wearing. In some instances, the homeware has proven of interest as well. And while we haven’t seen much interest in locations, there’s very little cost involved with running this across a variety of categories, and we’re figuring out how to service all that data to a user without creating a paralysis of analysis, freezing people up with too much data. You can’t overwhelm the user.

NTV: And to that point, how much emphasis is put on not interfering with the user experience?

TC: That is our No. 1 rule, frankly. Anything we do, we have to do it in the most non-obtrusive way possible. The way we think about it now, is letting the user know the service is available, they can access the information if they want and that notice then disappears. It’s then up to the user how they want to go in, engage and turn that layer on, if they want to. We know that the primary use case for all these platforms, is people are there to consume the content, and get lost in the stories. We respect that.

And yet we’ve had remarkable success, and seen amazing statistics around engagement. We see about a 40% to 50% unique engagement rate with short-form content enabled with our platform, with those viewers looking at an average of five products each throughout the video. For long-form video, it’s about 20%, with around five or six products each. Stepping back, that’s something we’re really proud of, that when someone watches a full episode of content, 20% are activating our feature and shopping. We weren’t expecting it to be that high, and we think that number will only grow.

NTV: How important is it to have pre-production on the ball ahead of time, and to have all the information about set decoration and costumes ready to go?

TC: It used to be the name of the game for us, getting that data ahead of time and entered in advance. Now the only manual piece is in quality control, where we go in to make sure everything in a video looks good. There’s really very little manual work done now. The really cool part of machine learning is our network retrains itself in real-time, making its own tweaks as it goes along. It keeps kicking out better and better results, and ultimately, it will eliminate the need for any manual quality control work.

NTV: What might be the different challenges for a movie vs. a TV show, and are there unique challenges among the services, like a streaming provider vs. a network?

TC: There are definitely different levels of interest across different types of content. We’ll see audiences in some shows have dramatically higher levels of engagement, and we’ve learned we can’t guess what will work ahead of time. We used to think we could predict what shows would see people shopping, and in the end, we were surprised by what performed well, and by how difficult it was to predict which shows or movies viewers would engage with.

On the platform side, there are different challenges with regard to the user experience. If you’re watching a movie or TV show on your laptop, you have a different set of tools in front of you to engage with, a mousepad, a keyboard. It’s a different design for that than for Comcast and a connected TV, where you’re using your remote to engage. We’ve come up with different ways to get around the difficulty inherent with using a remote to engage with something like this.

We’re not partnered with Netflix right now, but let’s say we were and users were watching on different platforms — each platform would need a different user interface. The Netflix app on Apple TV would need a different approach than a laptop app.

NTV: What’s the next big step, not just for you specifically, but for AI-enabled, machine learning interactivity with content?

TC: The horizon for the industry as a whole is just really interesting right now. I think different neural networks are becoming really efficient at completing really complex tasks, and we’re bullish on the machine learning and deep learning space and how they can be applied to entertainment. It’s going to open up a lot of opportunities in automation during the next decade.

And for us in particular, what we’re focusing on now is where else we can apply what we’ve developed in product recognition and product mapping, but also looking at where else we can repurpose the network to create as much value as possible. There’s a lot of non-visual applications for this, and we’re excited to see where it goes.