Supreme Court Appears Troubled by Argument Challenging Section 230

Justices signaled they had issues with implications of plaintiff’s case

The Supreme Court has officially waded into the white-hot debate over how much liability protection Section 230 gives Big Tech for its online content organization and moderation efforts, and, if the justices’ questions are any gauge, the challenge to that protection as presented has a high hill to climb.

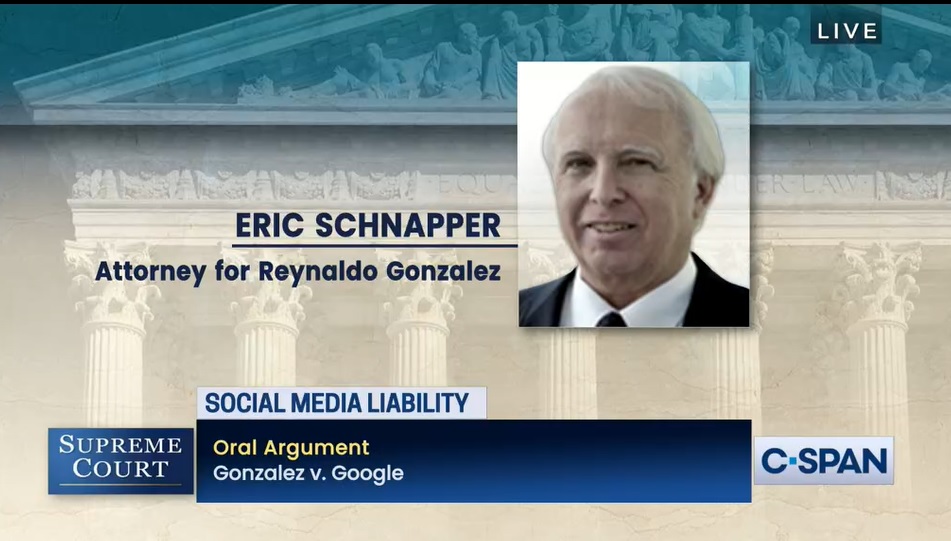

Those indications came during marathon oral arguments in which the justices appeared either confused by the argument of attorney Eric Schnapper, representing the challengers to Section 230, or unpersuaded by his assertion that social media platforms’ display of thumbnails of third-party ISIS-related material made them liable for that content.

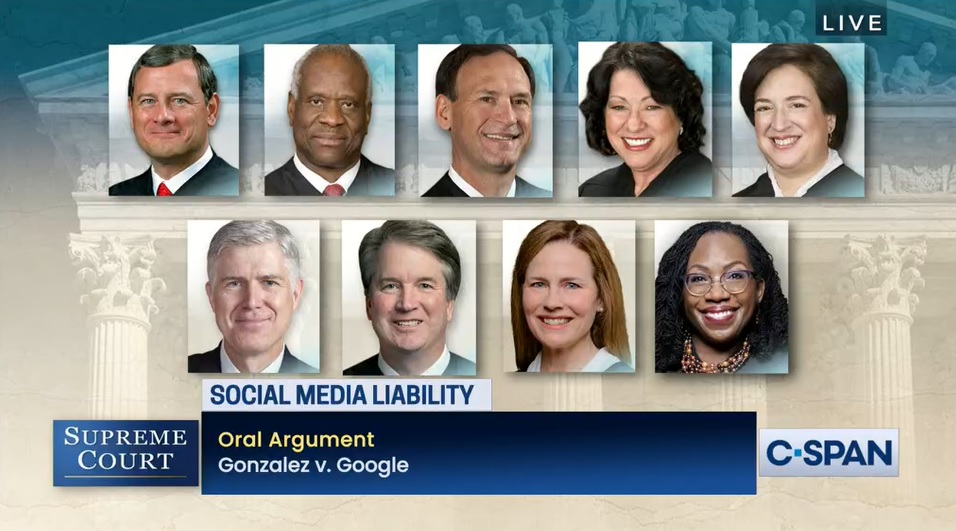

On Tuesday (February 21), the justices heard oral arguments in the case of Gonzalez v. Google, which deals with whether sites like YouTube (the target of the litigation), Facebook or Twitter could be held liable for aiding and abetting terrorists because of how they provided a platform for their online speech, or whether such liability is barred by Section 230 of the Communications Act, which generally provides social-media platforms immunity from liability for third-party speech posted on their sites.

The issue as specifically presented to the court in Gonzalez is this: “Does section 230(c)(1) immunize interactive computer services when they make targeted recommendations of information provided by another information content provider, or only limit the liability of interactive computer services when they engage in traditional editorial functions (such as deciding whether to display or withdraw) with regard to such information?”

The justices, conservative and liberal alike, used “let’s say I disagree with you” and “I am confused” a lot in their questioning of Schnapper — not a good sign for the plaintiffs.

The justices also seemed to suggest Congress needed to step in to decide what Section 230 should mean in a post-algorithm world — the statute dates from 1996 — given the implications for the operation of the internet if sites began being held liable for suggestions, recommendations or “next ups” under the plaintiff’s argument.

They clearly appeared uncomfortable with trying to set the precedent of what kind of content online platforms should be liable for, with Justice Elena Kagan suggesting that they were not the nine most internet-savvy people around.

Justice Neil Gorsuch raised the prospect that the liability shield for a website using neutral tools to provide recommended content, a test suggested by a lower court, might be rendered moot by artificial intelligence (AI), which could arguably be seen as a content creator given what it can now do (write poetry and term papers, for example).

On Wednesday (February 22), the court will also hear a case, Twitter v. Taamneh, involving online terrorist speech. While it does not directly involve Section 230, it could also impact how content can be moderated and potentially how the Gonzalez case will be decided.

Schnapper’s argument, which the justices suggested had morphed somewhat from the case as briefed, was that YouTube thumbnails suggesting ISIS videos to users were not strictly third-party content, but content in which YouTube participated and that users had not specifically asked for. Thus, he argued, the thumbnails were not shielded by the law, which protects a social-media platform in its function of supplying an interactive computer service.

That argument landed like a lead balloon with the justices.

The justices asked repeatedly why an algorithm that provided thumbnails of ISIS-related content to users looking for ISIS information was any different from recommending cooking content to folks looking for that, and why that was not a neutral computer service function protected by the statute.

Schnapper said that if YouTube simply provided a series of third-party ISIS videos to that user, that would be protected. But the thumbnails were at least partly YouTube-generated content, he said, which made them neither third-party content nor protected.

Kagan was concerned that the thumbnail argument would essentially remove the Section 230 protection entirely.

Arguing for YouTube, attorney Lisa Blatt said websites must make choices based on something, but that those choices and recommendations do not constitute aiding and abetting or any conduct that is not protected by Section 230.

She got some tough questions, too, but more in the vein of traditional probing and poking at arguments. For comparison, Schnapper and a Biden administration lawyer who also supported some liability for edge providers were questioned for almost two hours, while the justices were done with Blatt in well under an hour.

Kagan was uncomfortable with Section 230 being taken so far as to protect recommendations of defamatory material, for example, and wondered whether Congress could possibly have meant that protection to be afforded. Justice Ketanji Brown Jackson also questioned whether Congress meant to protect the active promotion of offensive material since the statute provided liability protection for taking down info.

Blatt said that what was offensive to one might not be to another and that the plaintiffs were not arguing that such material should be taken down, but that the problem was with teasing out and recommending information through thumbnails or other means.

She also took issue with the suggestion that algorithms were not in play in 1996, when the statute was passed. She said Congress did know about algorithmic recommendations when it provided Section 230 liability protections.

Blatt pointed out that thumbnails aren't mentioned in the complaint and said that, in any case, they are embedded third-party speech, not YouTube speech.

One billion hours of video are watched each day on YouTube, she added, so it must be organized somehow.

The Gonzalez decision will definitely impact how websites do or can do business going forward, and could affect chiefly Republican-controlled state legislatures, where content moderation laws have cropped up to counter what conservatives have said is a Silicon Valley bias against conservative content.

In 2019, the court declined to hear the appeal of a California Supreme Court decision concerning the degree to which Section 230 shields platforms from liability.

“Section 230 is what allows reputable online sites to respond to bad actors,” said Computer & Communications Industry Association president Matt Schruers. CCIA filed a brief with the high court supporting Section 230. “No one wants to see extremist content on digital platforms, and this law Congress enacted is what allows companies to search for and remove dangerous content without the risk that missing a needle in a haystack could lead to bankruptcy.

“If this case alters federal law, companies are likely to respond in one of two ways to protect themselves legally,” Schruers warned. “Companies who could muster the resources would over-moderate everything, while others would throw up their hands and not moderate anything. Unfortunately, a bad decision from the Supreme Court could drive companies to one of those two extremes in pursuit of legal certainty.”

The Information Technology & Innovation Foundation was equally alarmed by the prospect of weakening Section 230.

“The Supreme Court’s decision in this case will determine not only the future of Section 230 but the future of the modern Internet,” senior policy analyst Ashley Johnson said. “Significantly weakening Section 230’s liability shield will change the way many online services operate, to the detriment of consumers and free expression online.”

Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.