New Section 230 Bill Would Target ‘Malicious’ Algorithms

In reaction to Facebook flap, Dems try to take down absolute online immunity

In the wake of revelations by a Facebook whistleblower and allegations about the company’s internal research on the impact of its platforms on young people, House Energy & Commerce Committee leaders have proposed a bill that targets “reckless” use of algorithms by limiting Section 230.

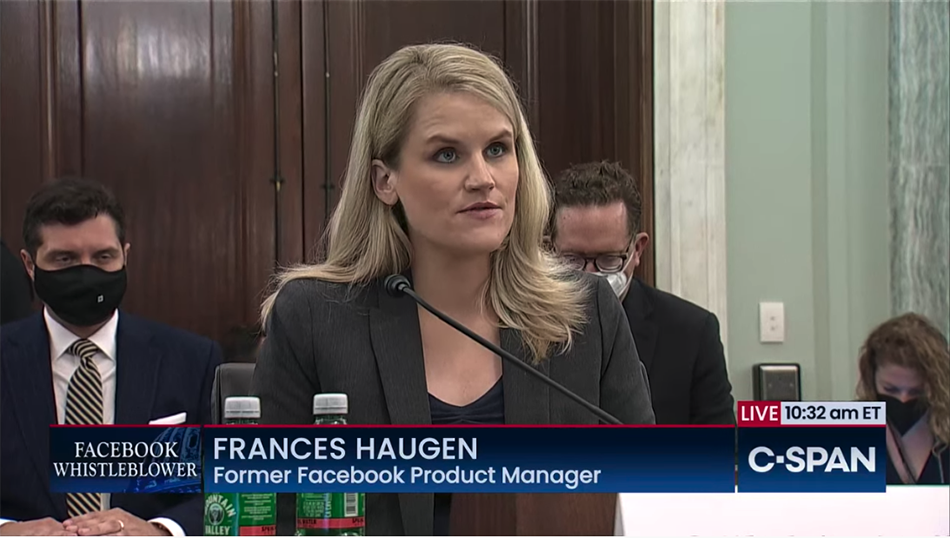

Former Facebook staffer Frances Haugen testified about the documents before the committee at a heated hearing that prompted legislators to suggest it was Big Tech’s Big Tobacco moment.

Section 230 is the add-on to the 1996 Communications Decency Act that provides web platforms immunity from civil liability for what appears on their platforms.

While there has been bipartisan criticism of Facebook, the legislators unveiling the bill are all Democrats: Energy and Commerce Committee chairman Frank Pallone Jr. (D-N.J.), Communications Subcommittee chairman Mike Doyle (D-Pa.) and Consumer Protection Subcommittee chair Jan Schakowsky (D-Ill.).

The bill, the Justice Against Malicious Algorithms Act, would remove absolute immunity for an edge provider that “knowingly or recklessly uses an algorithm or other technology to recommend content that materially contributes to physical or severe emotional injury.”

The bill does not apply to “small” online platforms, defined as those with fewer than five million unique monthly visitors, to algorithms or search features that aren't based on personalization, or to infrastructure like Web hosting or data transfer or storage.

The big issue with Facebook’s internal research was that it showed that some teens said Instagram made them feel worse about themselves and even contributed to thoughts of suicide. Facebook countered that the research found that a majority of kids did not feel that way, and that the information helped the company take action to help those who did.

But Congress has not been assuaged, arguing that Facebook has not done nearly enough and that it kept the research under wraps while downplaying the negative results.

“Social media platforms like Facebook continue to actively amplify content that endangers our families, promotes conspiracy theories, and incites extremism to generate more clicks and ad dollars,” Pallone said. “These platforms are not passive bystanders — they are knowingly choosing profits over people, and our country is paying the price. The time for self-regulation is over, and this bill holds them accountable. Designing personalized algorithms that promote extremism, disinformation, and harmful content is a conscious choice, and platforms should have to answer for it.”

Added Doyle: “We finally have proof that some social media platforms pursue profit at the expense of the public good, so it’s time to change their incentives, and that’s exactly what the Justice Against Malicious Algorithms Act would do. Under this bill, Section 230 would no longer fully protect social media platforms from all responsibility for the harm they do to our society. It’s my hope that by making it possible to hold social media platforms accountable for the harm they cause, we can help optimize the internet’s impact on our society.”

Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.