House Dems: Online Disinformation Has Deadly Consequences


The House Communications Subcommittee and Consumer Protection Subcommittee teamed up to tackle the online content moderation issue Wednesday (June 24), suggesting it was, literally, one of life and death, and laying much of the blame at the digital doorsteps of Facebook, Google and Twitter.


That was in a hearing entitled "A Country in Crisis: How Disinformation Online Is Dividing the Nation."

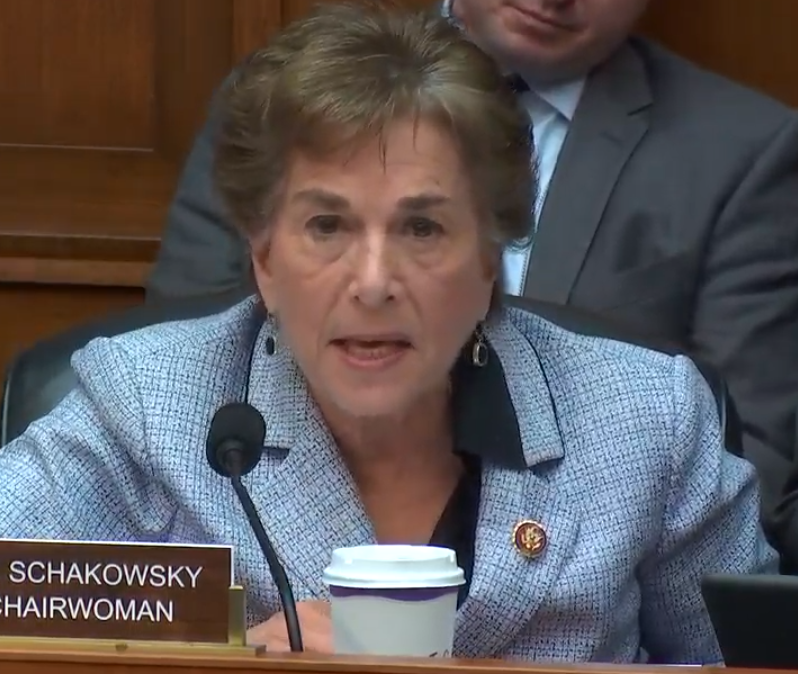

Consumer Protection Subcommittee chair Jan Schakowsky (D-Ill.) said that "the American people are dying and suffering as a result of online disinformation" and that, since the courts and industry have not acted to rein it in and have instead profited from it, Congress must step in.

Communications Subcommittee chair Mike Doyle (D-Pa.) said that the pandemic and social unrest had put an exclamation point on the concern over "the flood of disinformation online - principally distributed by social media companies - and the dangerous and divisive impact it is having on our nation."

How dangerous? He said that the world is facing "an unprecedented tsunami of disinformation that threatens to devastate our country and the world."

Doyle said social media companies were dividing the nation by driving misinformation, "the largest among them being Facebook, YouTube, and Twitter."


"When Congress enacted Section 230 of the Communications Decency Act in 1996, this provision provided online companies with a sword and a shield to address concerns about content moderation and a website’s liability for hosting third party content," he said.

The section exempts social media platforms from civil liability for how they treat third-party content, whether they decide to moderate that content or not.

But Doyle suggested that exemption has allowed for the spread of dangerous information.

"[W]hile a number of websites have used 230 for years to remove sexually explicit and overly violent content, they have failed to act to curtail the spread of disinformation. Instead they have built systems to spread it at scale and to monetize the way it confirms our implicit biases."

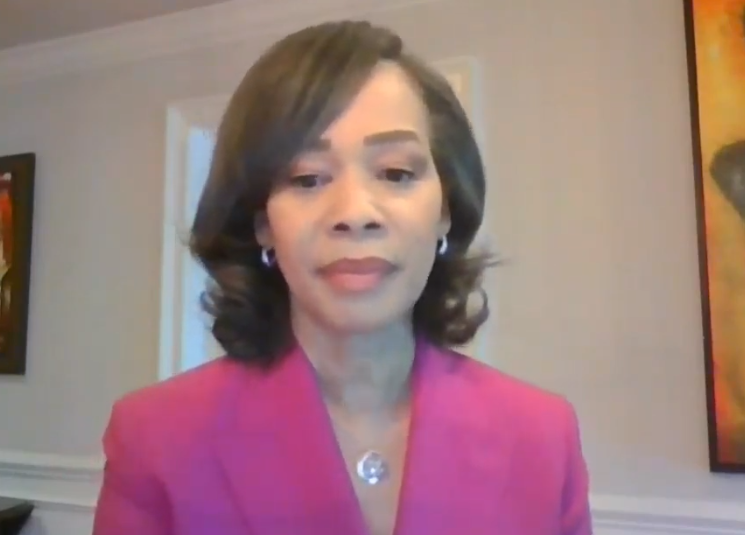

Brandi Collins-Dexter, senior campaign director at Color of Change and a witness at the hearing, agreed. "When companies say they are not willing to remove certain things, what they are really saying is that addressing white nationalism, disinformation and anti-blackness simply don't rise to a level of urgency for them," she told the committee.

She said tech companies "have repeatedly failed to uphold societal values like transparency, accountability and fairness."

Schakowsky was no less concerned about Big Tech.

"The harms associated with misinformation and disinformation continue to fall disproportionately on communities of color, who already suffer worse outcomes from COVID-19," she said. "All the while, the President himself is continually spreading dangerous disinformation that Big Tech is all too eager to profit from."

Schakowsky took the sword and shield analogy and turned it on Silicon Valley. "Big Tech uses it as a shield to protect itself from liability when it fails to protect consumers or harms public health, and uses it as a sword to intimidate cities and states when they consider legislation..."

Rep. Lisa Blunt Rochester (D-Del.) said that social media companies have not done enough to control hate speech and disinformation.

She said that amid a pandemic, record unemployment and Americans demanding action on police violence and racial justice, "social media have failed to prevent white nationalists, scammers, and other opportunists from using their platforms to exacerbate these crises."

She said Facebook had been the most irresponsible platform and that it, and other social media, had a responsibility to "get their act together" and to be "a part of the solution rather than the problem."

Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.